What is robots.txt in SEO? Does robots.txt have a role to play in SEO? If so, what is it, and how can you improve it? Find out here!

Search engine optimization (SEO) involves both major and minor website updates. The robots.txt file may seem like a minor technical SEO component, but it can have a significant effect on your site’s visibility and rankings.

Once robots.txt is explained, you can see how crucial this file is to the structure and functionality of your website. Read on to find out what robots.txt is in SEO, along with robots.txt best practices that can help raise your position on search engine results pages (SERPs).

What Is Robots.txt In SEO?

The robots.txt file, which implements the Robots Exclusion Protocol, is read by search engines and contains instructions that allow or disallow access to all or specific areas of your website. Search engines like Google or Bing send web crawlers to your website to view its content and gather data for inclusion in search results.

Robots.txt files are typically used to control web crawlers and are helpful when you do not want these crawlers to reach a specific area of your website.

Robots.txt files are crucial because they tell search engines which areas they are permitted to crawl. You can use them to leave your whole website open to crawlers or to restrict it wholly or partially.
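To make the difference between full and partial restriction concrete, here is a minimal sketch that uses Python’s standard `urllib.robotparser` module to evaluate three small robots.txt files. The domain `www.example.com`, the `/admin/` folder, and the `build_parser` helper are hypothetical, and Python’s parser only approximates how any particular search engine reads the file.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# An empty Disallow value blocks nothing: the whole site stays crawlable.
open_site = build_parser("User-agent: *\nDisallow:\n")

# "Disallow: /" blocks every path on the site.
closed_site = build_parser("User-agent: *\nDisallow: /\n")

# A partial restriction blocks one hypothetical folder and leaves the rest open.
partial_site = build_parser("User-agent: *\nDisallow: /admin/\n")

url_blog = "https://www.example.com/blog/post.html"
url_admin = "https://www.example.com/admin/settings.html"

print(open_site.can_fetch("Googlebot", url_admin))     # True
print(closed_site.can_fetch("Googlebot", url_blog))    # False
print(partial_site.can_fetch("Googlebot", url_blog))   # True
print(partial_site.can_fetch("Googlebot", url_admin))  # False
```

An empty `Disallow:` value allows everything, while `Disallow: /` shuts out every crawler the group applies to.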

You should not delete your robots.txt file; it is a necessary component of your website. It tells well-behaved bots which parts of your content to stay out of, although bots that ignore its rules can still “snoop” through your site. Now that you know what robots.txt is in SEO, let’s move on to the next part.

Why Is Robots.txt Important in SEO?

They are essential because:

- They help optimize your crawl budget, because crawlers spend less time on unimportant pages and focus on relevant content. “Thank you” pages are an example of pages you would not want Google to crawl.

- By pointing crawlers to the pages that matter, for example through a Sitemap line, robots.txt helps search engines find the pages you want indexed.

- They let you control how crawlers access specific portions of your site.

- They can keep crawlers out of entire areas of a website, and each root domain or subdomain can have its own robots.txt file. A payment details page is a good illustration of an area to block.

- Additionally, you can keep internal search results pages from showing up in search engine results pages.

- Files that should not be indexed, including PDFs and specific images, can be kept away from crawlers using robots.txt.
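As a rough sketch of how these uses translate into rules, the file below blocks hypothetical thank-you, payment, internal search, and PDF download paths, then checks a few URLs with Python’s `urllib.robotparser`. All paths are assumptions for illustration. Google also understands wildcard patterns such as `/*.pdf$` to match every PDF, but Python’s standard parser does simple prefix matching, so this sketch keeps PDFs in a dedicated folder instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths standing in for the page types listed above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /thank-you/
Disallow: /checkout/payment/
Disallow: /search
Disallow: /downloads/pdf/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Each path is paired with the result we expect from the rules above.
checks = {
    "/thank-you/order-123": False,
    "/checkout/payment/card": False,
    "/search?q=shoes": False,
    "/downloads/pdf/price-list.pdf": False,
    "/products/shoes": True,
}

for path, expected in checks.items():
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path}: crawlable={allowed} (expected {expected})")
```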

How Does Robots.txt Work?

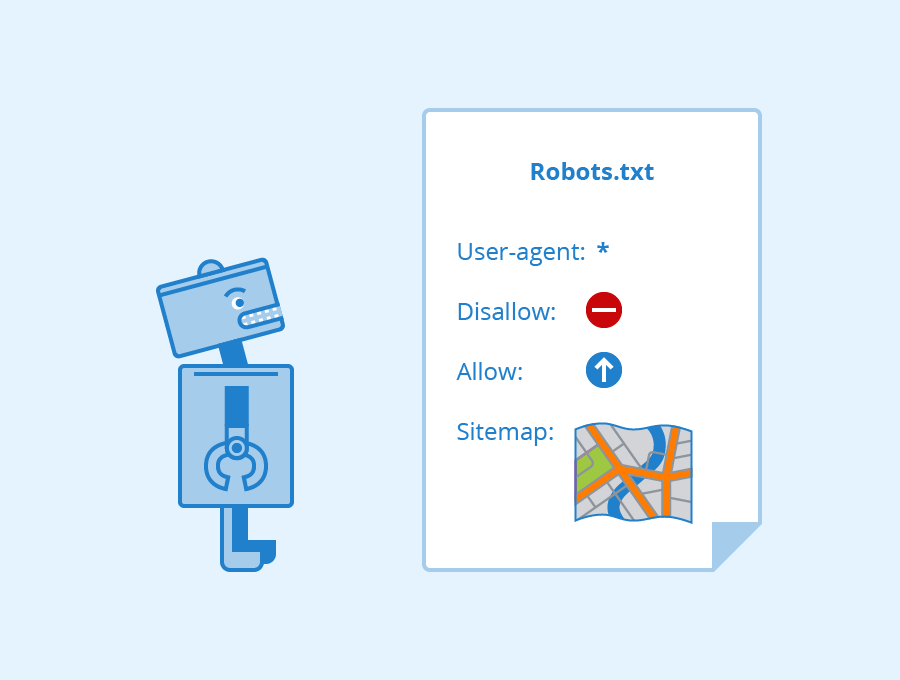

The robots.txt file has a pretty straightforward layout. It uses a handful of predefined keyword/value pairs, called directives. A robots.txt file is made up of one or more groups of directives, each beginning with a User-agent line.

These are the most common: User-agent, Disallow, Allow, Crawl-delay, and Sitemap.
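To show how those directives fit together, here is a deliberately simplified Python sketch of how a file splits into groups. `parse_groups` and `Group` are hypothetical helpers written for this illustration, not part of any search engine or library; real parsers, such as Google’s, handle many more edge cases.

```python
from dataclasses import dataclass, field


@dataclass
class Group:
    """One group: the user agents it names plus the rules listed under them."""

    user_agents: list = field(default_factory=list)
    rules: list = field(default_factory=list)  # (directive, value) pairs


def parse_groups(text: str):
    """Hypothetical, simplified parser: split robots.txt into groups.

    Consecutive User-agent lines share one group, and Sitemap lines are
    collected separately because they belong to no group.
    """
    groups, sitemaps = [], []
    current = None
    last_was_agent = False
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and spaces
        if ":" not in line:
            continue  # skip blank or malformed lines
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()  # directive names are case-insensitive
        if key == "user-agent":
            if current is None or not last_was_agent:
                current = Group()  # a new group starts here
                groups.append(current)
            current.user_agents.append(value)
            last_was_agent = True
        elif key == "sitemap":
            sitemaps.append(value)
        elif current is not None:
            current.rules.append((key, value))
            last_was_agent = False
    return groups, sitemaps


ROBOTS_TXT = """\
# Rules for Google's main crawler
User-agent: Googlebot
Disallow: /drafts/

# Rules for Bing's crawler and every other crawler
User-agent: Bingbot
User-agent: *
Allow: /drafts/published.html
Disallow: /drafts/
Crawl-delay: 10

Sitemap: https://www.example.com/sitemap.xml
"""

groups, sitemaps = parse_groups(ROBOTS_TXT)
for group in groups:
    print(group.user_agents, group.rules)
print(sitemaps)
```

The two `User-agent` lines in a row form a single group, and the `Sitemap` line is collected on its own because it does not belong to any group.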

User-Agent

Every crawler identifies itself with a name called a user agent, such as Googlebot for Google or Bingbot for Bing. In robots.txt, the User-agent line names the crawler that the rules below it apply to, and an asterisk (*) applies them to every crawler.

As a result, you can be quite specific about which search engines may crawl your website and show its content in search results.
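The minimal sketch below, assuming hypothetical rules for `www.example.com`, gives Googlebot its own group and blocks every other crawler through the catch-all `*` group. It uses Python’s `urllib.robotparser` to check which crawler may fetch a page: in this example, Googlebot follows the group that names it, and Bingbot falls back to the `*` group because no group names it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot may crawl everything except /beta/,
# while every other crawler is kept out of the whole site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /beta/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "https://www.example.com/blog/robots-guide.html"
print(parser.can_fetch("Googlebot", page))  # True: Googlebot has its own group
print(parser.can_fetch("Bingbot", page))    # False: falls back to the * group
```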

Allow

With this directive, you can tell crawlers such as Googlebot to visit and crawl specific pages or directories.

An Allow rule lets bots access a page or subfolder even when its parent folder is disallowed.
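Here is a small sketch of that override, assuming a hypothetical `/private/` folder that contains one public press kit page. Google applies the most specific (longest) matching rule, while Python’s `urllib.robotparser`, used here for the check, applies the first matching rule in file order. Listing the `Allow` line first keeps both behaviors in agreement.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block /private/ but let one page inside it through.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit.html
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

base = "https://www.example.com"
print(parser.can_fetch("Googlebot", base + "/private/press-kit.html"))  # True
print(parser.can_fetch("Googlebot", base + "/private/salaries.html"))   # False
```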

Disallow

In a website’s robots.txt file, the Disallow directive tells search engines not to crawl a particular page. This usually keeps the page’s content out of search results, although the URL itself can still be indexed if other pages link to it. You can also use Disallow to tell search engines to skip all the documents and files inside a particular folder that you don’t want crawled.
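The sketch below, assuming hypothetical paths on `www.example.com`, disallows one page and one folder, then checks URLs with Python’s `urllib.robotparser` through a hypothetical `is_crawlable` helper. It also shows that rules match by path prefix: without a trailing slash, `Disallow: /internal` blocks `/internal-news.html` too.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, path: str) -> bool:
    """Hypothetical helper: can Googlebot fetch this path under these rules?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", "https://www.example.com" + path)


# Hypothetical rules: block one single page and one whole folder.
WITH_SLASH = """\
User-agent: *
Disallow: /old-offer.html
Disallow: /internal/
"""

# The same folder rule written without the trailing slash.
WITHOUT_SLASH = """\
User-agent: *
Disallow: /internal
"""

print(is_crawlable(WITH_SLASH, "/old-offer.html"))         # False
print(is_crawlable(WITH_SLASH, "/internal/report.pdf"))    # False
print(is_crawlable(WITH_SLASH, "/internal-news.html"))     # True
print(is_crawlable(WITHOUT_SLASH, "/internal-news.html"))  # False: prefix match
```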

Crawl-Delay

Most directives simply tell search engine crawlers whether they may access specific pages or directories. In contrast, the Crawl-delay directive is meant to slow crawling down: it asks a crawler to wait a set number of seconds between requests to your site. Some crawlers, such as Bingbot, honor it, but Googlebot ignores it.

For Google, crawl speed was instead managed through a Crawl Rate setting in Google Search Console (GSC), which limited how quickly Googlebot requested pages from your site rather than blocking access to it.
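For crawlers that do honor the directive, a minimal sketch with Python’s `urllib.robotparser` shows how the value is read. The 10-second delay for Bingbot and the `seconds_between_requests` helper are hypothetical; the helper falls back to 0 when no delay is set for a crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: ask Bingbot to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10

User-agent: *
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def seconds_between_requests(agent: str) -> int:
    """Return the Crawl-delay that applies to this agent, or 0 if none is set."""
    delay = parser.crawl_delay(agent)
    return int(delay) if delay is not None else 0


print(seconds_between_requests("Bingbot"))    # 10
print(seconds_between_requests("Googlebot"))  # 0: no delay in the * group
```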

Sitemaps

Another directive found in robots.txt files is Sitemap, which tells crawlers where to find your XML sitemap: the file that lists the pages on your website you want web spiders to crawl and index.

The sitemap itself remains a separate XML file. Robots.txt only contains a Sitemap line with the sitemap’s full, absolute URL, and this line applies to all crawlers regardless of the User-agent groups around it.

You can either list all of your sitemaps in the robots.txt file or submit each sitemap directly to each search engine’s webmaster tools, such as Google Search Console. The second option, however, has to be repeated separately for every search engine.
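As a sketch, assuming two hypothetical sitemap URLs on `www.example.com`, the file below lists both sitemaps with absolute URLs, and Python’s `urllib.robotparser` reads them back with `site_maps()` (available in Python 3.8 and later).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: Sitemap lines use full URLs and sit outside any group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/

Sitemap: https://www.example.com/sitemap-pages.xml
Sitemap: https://www.example.com/sitemap-posts.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns None when the file lists no sitemaps.
for sitemap_url in parser.site_maps() or []:
    print(sitemap_url)
```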

Next, let’s look at some of the best robots.txt SEO techniques. They give you more control over how search engines crawl your website and give Google’s bots efficient routes through it.

Robots.txt SEO Best Practices

Content Must Be Crawlable

Your website’s content needs to be pertinent, important, and searchable.

Duplicate material, incorrectly copied code, and leftover comments in your website’s code are examples of unnecessary content that makes it harder for Google’s bots to crawl, index, and rank your web pages.

To avoid this, keep an eye on your page content, and use robots.txt directives to keep crawlers away from low-value or unreliable URLs.

Use Disallow Directive For Duplicate Content

Duplicate content is a fairly widespread issue on the internet, often caused by URL variations for different regions or languages on your website.

To keep those duplicate copies of your web pages from being crawled, you can use the Disallow: directive in robots.txt to tell Google not to crawl them.
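A minimal sketch, assuming a hypothetical site where printer-friendly copies of every product page live under `/print/`: one `Disallow` line keeps crawlers away from the copies while the originals stay crawlable. The check uses Python’s `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rule: /print/ holds duplicate, printer-friendly copies.
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

base = "https://www.example.com"
for path in ["/products/blue-shoes", "/print/products/blue-shoes"]:
    # The original page stays crawlable; the duplicate under /print/ does not.
    print(path, parser.can_fetch("Googlebot", base + path))
```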

Use Robots.txt Only For Non-Sensitive Information

Information or data that could be exploited to cause financial or other harm to your customers, employees, business, website, or any other party is considered sensitive information or data.

Using the robots.txt file to hide private user data or other sensitive information will not work; the data will still be accessible.

That is why real security measures, such as password protection, should be a top concern for any website.

Robots.txt only asks crawlers not to visit a path. Other websites might still link to those private files, giving bots fresh routes to them as they crawl, and the robots.txt file itself is public, so anyone can read the paths you list in it.

Use Exact, Case-Sensitive Paths

The paths in URLs are case-sensitive, and robots.txt uses them after a directive such as Allow or Disallow to specify which routes search engine bots may or may not crawl.

The robots.txt file tells Googlebot which URL paths it may access and which it must stop crawling. The catch is that crawlers only apply a rule to URLs whose paths genuinely match it, including capitalization.

Allow and Disallow paths must start with a forward slash (/) and are matched from the root of your site; the Sitemap directive is the one place where a full, absolute URL is required. Getting these details wrong means your rules will not match the URLs you intended, and Google may crawl the wrong routes.

Remember that the file must be named exactly “robots.txt” and placed in the root directory of your site, or crawlers will not find it and will not follow your rules.

Checking each URL, including automatically generated ones, before you publish the file will save you time on unnecessary test runs.
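The sketch below checks these points with Python’s standard library. It assumes a hypothetical `/Private/` folder: directive names such as `disallow` are not case-sensitive, but paths are, so `/private/` is not blocked. The hypothetical `robots_url` helper shows that crawlers look for the file at the root of each host.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

# Hypothetical rule: the directive name is lowercase, the path is capitalized.
ROBOTS_TXT = """\
User-agent: *
disallow: /Private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

base = "https://www.example.com"
print(parser.can_fetch("Googlebot", base + "/Private/report.html"))  # False
print(parser.can_fetch("Googlebot", base + "/private/report.html"))  # True


def robots_url(page_url: str) -> str:
    """Return where crawlers look for robots.txt: the root of the page's host."""
    return urljoin(page_url, "/robots.txt")


print(robots_url("https://shop.example.com/products/shoes.html"))
# https://shop.example.com/robots.txt
```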

Final Words

With robots.txt, you can make sure crawlers focus on the pages you want your website to rank for. It is a simple but effective way to manage how search engines analyze your pages as they assess, index, and rank your website.

Do keep in mind that robots.txt is not the ONLY factor you need to improve your rankings. Elements like SEO slugs, backlinks, meta tags, title tags, anchor text, and other such elements also play a huge role in optimizing a page for search. So, make sure you have an SEO agency or SEO specialist to guide you through all the stages properly.